gem5 添加epoll支持

起因

本周希望给我们新设计的gem5 x86微码测试正确性(gem5 原本的微码写的不太好,和真实x86微码有较大差异,前人也有相关研究 gem5_Haswell),之前已经能对部分x86程序能正确运行了,例如hello, coremark, 部分spec 2000程序,但是很多spec2017程序都还不能运行,所以希望先进行完备的单元测试,然后再对spec 2017继续debug, 逐步迭代。

找到了龙芯公司内部的一个用于LATX 二进制 翻译测试代码,其中用到了很多酷炫的库,例如google gRPC, 是一个高性能的Remote Procedure Call (RPC) 库,使用strace 发现使用了epoll相关的syscall,如下:

server使用的接口

epoll_create1(EPOLL_CLOEXEC) = 3

epoll_ctl(3, EPOLL_CTL_ADD, 4, {events=EPOLLIN|EPOLLET, data={u32=19081032, u64=19081032}}) = 0

epoll_ctl(3, EPOLL_CTL_ADD, 5, {events=EPOLLIN|EPOLLOUT|EPOLLET, data={u32=436067248, u64=140218233379760}}) = 0

client 使用的接口

epoll_create1(EPOLL_CLOEXEC) = 3

epoll_ctl(3, EPOLL_CTL_ADD, 4, {events=EPOLLIN|EPOLLET, data={u32=15475432, u64=15475432}}) = 0

导致gem5 在运行这个多线程程序时候报错,invalid syscall

查看gem5源码发现没有对epoll syscall的支持,所以我需要在gem5中添加epoll 的支持

epoll是啥

首先需要学习下select, poll, epoll是啥!

先看《UNIX 环境高级编程》中,对select, poll 都进行了很系统的讲解,也有对应的测试程序,这里也不再赘述。

看看epoll 的文章:

man epoll 这里对具体的参数进行了说明

测试程序

使用chatgpt 帮我生成了一些关于epoll的简单测试程序(还有关于select, poll的测试就不放上来了)

对标注输入进行监听,一旦有输入就唤醒,然后输出、

#include <asm-generic/errno-base.h>

#include <stdio.h>

#include <stdlib.h>

#include <unistd.h>

#include <sys/epoll.h>

#include <fcntl.h>

#define MAX_EVENTS 10

int main() {

int epoll_fd, ready_fd_count;

struct epoll_event event, events[MAX_EVENTS];

// 创建 epoll 实例

epoll_fd = epoll_create1(0);

// epoll_fd = epoll_create1(EPOLL_CLOEXEC); // fork exec后子进程不会继承epoll_fd

if (epoll_fd == -1) {

perror("epoll_create1");

return 1;

}

printf("epoll_fd = %d\n", epoll_fd);

// 监听标准输入(文件描述符为 0)

int fd = 0;

event.events = EPOLLIN;

event.data.fd = 0; // 标准输入的文件描述符为 0

if (epoll_ctl(epoll_fd, EPOLL_CTL_ADD, fd, &event) == -1) {

perror("epoll_ctl");

return 1;

}

ENOTTY

while (1) {

ready_fd_count = epoll_wait(epoll_fd, events, MAX_EVENTS, 5000);

if (ready_fd_count == -1) {

perror("epoll_wait");

return 1;

}

printf("ready_fd_count = %d\n", ready_fd_count);

// 处理就绪的事件

for (int i = 0; i < ready_fd_count; ++i) {

if (events[i].data.fd == 0) {

printf("有数据可读!\n");

// 读取并处理标准输入的数据

char buffer[1024];

ssize_t num_read = read(0, buffer, sizeof(buffer));

if (num_read == -1) {

perror("read");

return 1;

}

if (num_read == 0) {

printf("已到达文件末尾,退出程序。\n");

close(epoll_fd);

return 0;

}

printf("读取到 %ld 个字节:%.*s", num_read, (int)num_read, buffer);

}

}

}

close(epoll_fd);

return 0;

}

使用静态链接编译(gem5 对动态链接的程序,支持起来要麻烦很多!)

gcc --static epoll.c -o epoll

这里主要使用了以下三个syscall, 能把这三个添加好就好

epoll_create1

epoll_ctl

epoll_wait

看看gem5 对于poll的实现

代码在/src/sim/syscall_emul.hh

无非做了以下几件事

-

解析参数,其中int nfds, int tmout可以直接使用,struct pollfd 需要使用copyIn 来传递

-

维护fd 映射关系,这里给出了几个实例:

不同视角 标准输入 tmp.txt普通文件 tmp2.txt普通文件 程序视角:guest/target 0 3 4 gem5视角:host/sim 0 7 8

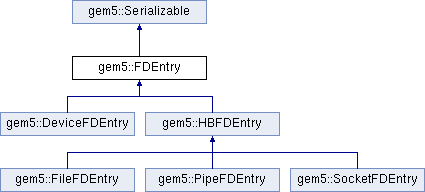

由于gem5和上面跑的程序是共享一个进程的,gem5自己还会额外使用几个文件,所以会维护并保证程序视角下,它看不到gem5自己使用的fd, 保证程序透明性(不知道自己在gem5上运行)。这个维护关系使用FDEntry来做,

gem5 同时使用allocFD 来申请sim_fd, getSimFD 来查询sim_fd

- 直接调用本机的syscall,

status = poll((struct pollfd *)fdsBuf.bufferPtr(), nfds, 0); - 把参数传递回程序用户空间,copyOut, 类似linux kernel的copyTo user space

template <class OS>

SyscallReturn

pollFunc(SyscallDesc *desc, ThreadContext *tc,

VPtr<> fdsPtr, int nfds, int tmout)

{

auto p = tc->getProcessPtr();

BufferArg fdsBuf(fdsPtr, sizeof(struct pollfd) * nfds);

fdsBuf.copyIn(SETranslatingPortProxy(tc)); // pollfd 数组, 从fdsPtr copy

// 存储一下guest/target fd, 后面需要替换成gem5的host fd,然后传递给poll syscall

int temp_tgt_fds[nfds]; // 暂存target fd

for (int index = 0; index < nfds; index++) {

temp_tgt_fds[index] = ((struct pollfd *)fdsBuf.bufferPtr())[index].fd;

auto tgt_fd = temp_tgt_fds[index];

auto hbfdp = std::dynamic_pointer_cast<HBFDEntry>((*p->fds)[tgt_fd]);

if (!hbfdp) // 获取到gem5维护的host fd, 放在p->fds 数组中FDArray, 何时放进去的?open 放进去的

return -EBADF;

auto host_fd = hbfdp->getSimFD(); // target=3, sim/host=7

((struct pollfd *)fdsBuf.bufferPtr())[index].fd = host_fd; // 替换为host fd

}

status = poll((struct pollfd *)fdsBuf.bufferPtr(), nfds, 0);

if (status == -1)

return -errno;

/**

* Replace each host_fd in the returned poll_fd array with its original

* target file descriptor.

*/

for (int index = 0; index < nfds; index++) {

auto tgt_fd = temp_tgt_fds[index];

((struct pollfd *)fdsBuf.bufferPtr())[index].fd = tgt_fd; // 替换为guest fd

}

/**

* Copy out the pollfd struct because the host may have updated fields

* in the structure.

*/

fdsBuf.copyOut(SETranslatingPortProxy(tc));

return status;

}

添加epoll支持

看明白了poll的支持,再结合其他的syscall 关于fd 的处理,就能添加epoll的支持了

在src/arch/x86/linux/syscall_tbl64.cc 中添加

{ 232, "epoll_wait", epoll_waitFunc<X86Linux64> },

{ 233, "epoll_ctl", epoll_ctlFunc<X86Linux64> },

{ 291, "epoll_create1", epoll_create1Func<X86Linux64> },

{ 318, "getrandom", ignoreFunc},

{ 334, "rseq", ignoreFunc } // 由于gcc 版本过高,需要忽略一下

- epoll_create1

template <typename OS>

SyscallReturn

epoll_create1Func(SyscallDesc *desc, ThreadContext *tc, int flags)

{

// int flags可以直接使用,但是epoll_create1()的返回值fd需要转换

auto p = tc->getProcessPtr();

int epoll_fd = epoll_create1(flags);

if (epoll_fd == -1)

return -errno;

bool close_on_exec = flags & O_CLOEXEC;

// alloc a new FileFDEntry

auto sim_hbfdp = std::make_shared<FileFDEntry>(epoll_fd, flags, "epoll fd", 0, close_on_exec);

int tgt_fd = p->fds->allocFD(sim_hbfdp);

DPRINTF_SYSCALL(Verbose, "epoll_create1: created tgt_fd=%d, epoll_fd=%d\n", tgt_fd, epoll_fd);

return tgt_fd;

}

- epoll_ctl

template <typename OS>

SyscallReturn

epoll_ctlFunc(SyscallDesc *desc, ThreadContext *tc, int epfd, int op,

int fd, VPtr<> event_ptr)

{

// 可以直接使用op; fd, epfd要从fds获取

auto p = tc->getProcessPtr();

// get sim_fd, sim_epfd

auto epfdp = std::dynamic_pointer_cast<FileFDEntry>((*p->fds)[epfd]);

if (!epfdp)

return -EBADF;

int sim_epfd = epfdp->getSimFD();

auto fd1 = std::dynamic_pointer_cast<HBFDEntry>((*p->fds)[fd]);

if (!fd1)

return -EBADF;

int sim_fd = fd1->getSimFD();

DPRINTF_SYSCALL(Verbose, "epoll_ctl: epfd=%d, sim_epfd=%d\n", epfd, sim_epfd);

DPRINTF_SYSCALL(Verbose, "epoll_ctl: fd=%d, sim_fd=%d\n", fd, sim_fd);

// get event

struct epoll_event event; // event_ptr -> event_buf -> event

BufferArg event_buf(event_ptr, sizeof(struct epoll_event));

event_buf.copyIn(SETranslatingPortProxy(tc));

memcpy(&event, event_buf.bufferPtr(), sizeof(struct epoll_event));

int result = epoll_ctl(sim_epfd, op, sim_fd, &event);

return (result == -1) ? -errno : result;

}

- epoll_wait

template <typename OS>

SyscallReturn

epoll_waitFunc(SyscallDesc *desc, ThreadContext *tc, int epfd,

VPtr<> events_ptr, int maxevents, int timeout)

{

// get sim_epfd

auto p = tc->getProcessPtr();

auto epfdp = std::dynamic_pointer_cast<FileFDEntry>((*p->fds)[epfd]);

if (!epfdp)

return -EBADF;

int sim_epfd = epfdp->getSimFD();

DPRINTF_SYSCALL(Verbose, "epoll_wait: epfd=%d, sim_epfd=%d\n", epfd, sim_epfd);

// get events

struct epoll_event events[maxevents]; // events_ptr -> events_buf -> events

BufferArg events_buf(events_ptr, sizeof(struct epoll_event) * maxevents);

events_buf.copyIn(SETranslatingPortProxy(tc));

memcpy(&events, events_buf.bufferPtr(), sizeof(struct epoll_event) * maxevents);

if (timeout < 0) {

// timeout < 0, epoll_wait() will block indefinitely

// so we need to set timeout to 0

timeout = 0;

}

int result = epoll_wait(sim_epfd, events, maxevents, timeout);

if (result == -1) {

perror("epoll_wait failed");

exit(1);

}

// copy events back to events_ptr

memcpy(events_buf.bufferPtr(), &events, sizeof(struct epoll_event) * maxevents);

events_buf.copyOut(SETranslatingPortProxy(tc));

return (result == -1) ? -errno : result;

}

运行测试程序

添加SyscallVerbose 的debug支持

./build/X86/gem5.debug --debug-flag=SyscallVerbose configs/example/se.py --cpu-type O3CPU --caches -c /home/yanyue/workspace/test/c_test/apue/14/epoll

运行结果如下

gem5 Simulator System. http://gem5.org

gem5 is copyrighted software; use the --copyright option for details.

gem5 version 21.2.1.1

gem5 compiled Jul 10 2023 11:30:18

gem5 started Jul 10 2023 19:23:18

gem5 executing on yanyue-Precision-3660, pid 161136

command line: ./build/X86/gem5.debug --debug-flag=SyscallVerbose configs/example/se.py --cpu-type O3CPU --caches -c /home/yanyue/workspace/test/c_test/apue/14/epoll

Global frequency set at 1000000000000 ticks per second

warn: No dot file generated. Please install pydot to generate the dot file and pdf.

build/X86/mem/mem_interface.cc:791: warn: DRAM device capacity (8192 Mbytes) does not match the address range assigned (512 Mbytes)

0: system.cpu: Constructing CPU with id 0, socket id 0

0: system.remote_gdb: listening for remote gdb on port 7000

**** REAL SIMULATION ****

build/X86/sim/simulate.cc:194: info: Entering event queue @ 0. Starting simulation...

build/X86/arch/x86/cpuid.cc:180: warn: x86 cpuid family 0x0000: unimplemented function 13

build/X86/arch/x86/cpuid.cc:180: warn: x86 cpuid family 0x0000: unimplemented function 20

build/X86/arch/x86/cpuid.cc:180: warn: x86 cpuid family 0x0000: unimplemented function 25

8911500: system.cpu: T0 : syscall brk: break point changed to: 0X4CEDC0

build/X86/sim/syscall_emul.cc:74: warn: ignoring syscall set_robust_list(...)

build/X86/sim/syscall_emul.cc:74: warn: ignoring syscall rseq(...)

build/X86/sim/mem_state.cc:443: info: Increasing stack size by one page.

build/X86/sim/syscall_emul.cc:74: warn: ignoring syscall getrandom(...)

16815000: system.cpu: T0 : syscall brk: break point changed to: 0X4EFDC0

17121000: system.cpu: T0 : syscall brk: break point changed to: 0X4F0000

build/X86/sim/syscall_emul.cc:74: warn: ignoring syscall mprotect(...)

28908000: system.cpu: T0 : syscall epoll_create1: created tgt_fd=3, epoll_fd=7

34683000: system.cpu: T0 : syscall epoll_ctl: epfd=3, sim_epfd=7

34683000: system.cpu: T0 : syscall epoll_ctl: fd=0, sim_fd=0

35033500: system.cpu: T0 : syscall epoll_wait: epfd=3, sim_epfd=7

asdf

37972000: system.cpu: T0 : syscall epoll_wait: epfd=3, sim_epfd=7

asdf

38860000: system.cpu: T0 : syscall epoll_wait: epfd=3, sim_epfd=7

asd^Cepoll_wait failed: Interrupted system call

能发现对于fd 的映射维护都是正确的,也能正确执行epoll syscall了

总结

本来做之前对于添加epoll 有很大的畏难情绪,但是在谢本壹师兄的鼓励下还是上手做这件事情了。

其实挺简单的,总共学习epoll花了快1天,真正写代码加调试就花了不到一天,很快就做完了,唯一的麻烦点就在于对于fd的映射。

可惜的是,目前仍然没能成功运行那个复杂的测试程序,因为有出现了新的问题

460424000: system.cpu: T0 : syscall clone: no spare thread context in system[cpu 0, thread 0]495834000: system.cpu: T0 : syscall mmap range is 0x7ffff7f6d000 - 0x7ffff7f6dfff

build/X86/sim/mem_state.cc:443: info: Increasing stack size by one page.

607038500: system.cpu: T0 : syscall open: failed -> path:/home/yanyue/workspace/mut/rawGem5/m5out/fs/proc/sys/net/core/somaxconn (inferred from:/proc/sys/net/core/somaxconn)

gem5 has encountered a segmentation fault!

- clone 系统调用失败

- 不能打开/proc/sys/net/core/somaxconn 这个虚拟文件

问题2 好解决,因为在open 系统调用中有对特殊文件的模拟,可以再添加我需要的就好,然后也是维护好对应的映射关系

int sim_fd = -1;

std::string used_path;

std::vector<std::string> special_paths =

{ "/proc/meminfo/", "/system/", "/platform/", "/etc/passwd",

"/proc/self/maps", "/dev/urandom",

"/sys/devices/system/cpu/online" };

for (auto entry : special_paths) {

if (startswith(path, entry)) {

sim_fd = OS::openSpecialFile(abs_path, p, tc);

used_path = abs_path;

}

}

但是这个测试程序才刚开始准备阶段,之后可能还有很多别的问题。

此外有的文章也提出了gem5 SE 模式下,对于多线程支持比较差,可能之后还是尝试修改这个测试程序吧。